Here are three real examples of what happens when enterprise SEO goes wrong – and how you can safeguard large websites from costly mistakes.

Enterprise SEO plays by different rules.

Strategies that may work for small or niche websites won’t always work at scale.

So what exactly can happen when enterprise SEO gets too big?

In this article, I’ll share three real-life examples. Then, you’ll learn a potential antidote for more efficient management of SEO at scale.

Facing the indexing dilemma

Small sites tend to grow one page at a time, using keywords as the building blocks of an SEO strategy.

Large sites often adopt more sophisticated approaches, leaning heavily on systems, rules and automation.

It’s critical to align SEO with business goals. Measuring SEO success by keyword rankings or traffic alone encourages over-indexing, which carries negative consequences.

There isn’t a magic formula to determine the optimal number of indexed URLs. Google does not set an upper limit.

A good starting point, however, is to consider the overall health of the SEO funnel. If a site…

- Pushes tens or hundreds of millions, or even billions of URLs to Google

- Ranks only for a few million keywords

- Receives visits to a few thousand pages

- Converts a fraction of these (if any at all)

…then it’s a good indication that you need to address some serious SEO health needs.
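As a rough illustration of the funnel check above, here is a minimal Python sketch. The class name, field names and example figures are all hypothetical, and the counts would come from your own sitemap, Search Console and analytics exports.

```python
from dataclasses import dataclass


@dataclass
class SeoFunnel:
    """Hypothetical funnel counts for one site (all fields are assumptions)."""
    submitted_urls: int      # URLs exposed to Google
    ranking_keywords: int    # keywords the site ranks for
    pages_with_visits: int   # pages that received organic visits
    converting_pages: int    # pages that produced at least one conversion


def funnel_ratios(funnel: SeoFunnel) -> dict[str, float]:
    """Return the share of submitted URLs that get visits and that convert."""
    def share(part: int, whole: int) -> float:
        # Guard against an empty denominator instead of raising.
        return part / whole if whole else 0.0

    return {
        "visited_share": share(funnel.pages_with_visits, funnel.submitted_urls),
        "converting_share": share(funnel.converting_pages, funnel.submitted_urls),
        "conversion_of_visited": share(funnel.converting_pages, funnel.pages_with_visits),
    }


# Illustrative numbers only, not taken from any real site.
example = SeoFunnel(
    submitted_urls=100_000_000,
    ranking_keywords=2_000_000,
    pages_with_visits=5_000,
    converting_pages=500,
)
ratios = funnel_ratios(example)
print(ratios)
```

A very small `visited_share` is the kind of signal the list above describes: most of what is pushed to Google never earns a visit.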

Fixing any site hygiene issues now should prevent even bigger SEO problems later.

Let’s look at three real-life enterprise SEO examples that illustrate why this is so important.

Case 1: Consequences of over-indexing low-quality content

Google has limited resources for web crawling and processing. They prioritize content that is valuable to users.

Google might crawl, but not index, pages it considers thin, duplicate or low quality.

If it’s only a few pages, it’s not a problem. But if it’s widespread, Google might ignore entire page types or most of the site’s content.

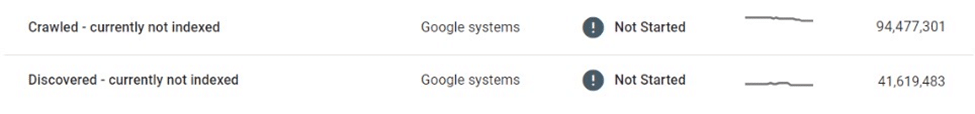

In one instance, an ecommerce marketplace found that tens of millions of its listing pages were impacted by selective crawling and indexing.

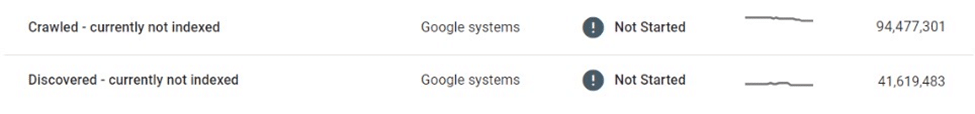

After crawling millions of thin, near-duplicate listings pages and not indexing them, Google eventually scaled back on crawling the website altogether, leaving many in “Discovered – currently not indexed” limbo.

This marketplace relied heavily on search engines to promote new listings to users. New content was no longer being discovered, which posed a significant business challenge.

Some immediate measures were taken, such as improving internal linking and deploying dynamic XML sitemaps. Ultimately, these attempts were futile.

The real solution required controlling the volume and quality of indexable content.

Case 2: Unforeseen consequences when crawling ceases

When crawling stops, unwanted content will remain in Google’s index – even if it’s changed, redirected or deleted.

Many websites use redirects instead of 404 errors for removed content to maintain authority. This tactic can squeeze additional traffic from ghost pages for months, if not years.

However, this can sometimes go horribly wrong.

For example, a well-known global marketplace that sells hand-crafted goods accidentally revealed sellers’ private information (e.g., name, address, email, phone number) on localized versions of its listing pages. Some of these pages were indexed and cached by Google, displaying personally identifiable information (PII) in search results and compromising user safety and privacy.

Because Google didn’t re-crawl these pages, removing or updating them didn’t eliminate them from the index. Even months after deletion, cached content and users’ PII remained in Google’s index.

In this situation, it was the responsibility of the marketplace to fix the bugs and work directly with Google to remove sensitive content from Search.

Case 3: The risks of over-indexing search results pages

Uncontrolled indexing of large volumes of thin, low-quality pages can backfire – but what about indexing search result pages?

Google doesn’t endorse indexing of internal search results, and many seasoned SEOs would strongly advise against this tactic. However, many large sites have leaned heavily on internal search as their principal SEO driver, often yielding substantial returns.

If user engagement metrics, page experience, and content quality are high enough, Google can turn a blind eye. In fact, there is enough evidence to suggest that Google might even prefer a high-quality internal search result page to a thin listing page.

However, this strategy can go wrong as well.

I once saw a local auction site lose a significant portion of its search page rankings – and more than a third of its SEO traffic – overnight.

The 80/20 rule applies: a small portion of head terms accounts for most SEO visits to indexed search results. However, it’s often the long tail that makes up the lion’s share of the URL volume and boasts some of the highest conversion rates.

As a result, of the sites that utilize this tactic, few impose hard limits or rules on indexing of search pages.

This poses two major problems:

- Any search query can generate a valid page, which means an infinite number of pages could be autogenerated.

- All of them are indexable in Google.

In the case of one classifieds marketplace that monetized its search pages with third-party ads, this vulnerability was exploited through a form of ad arbitrage:

- An enormous number of search URLs were generated for shady, adult and entirely illicit terms.

- While these autogenerated pages returned no actual inventory results, they served third-party ads, and were optimized to rank for requested search queries through page template and metadata.

- Backlinks were built to these pages from low-quality forums to get them discovered and crawled by bots.

- Users who landed on these pages from Google would click on the third-party ads and proceed to the low-quality sites that were the intended destination.

By the time the scheme was discovered, the site’s overall reputation had been damaged. It was also hit by several penalties and sustained massive declines in SEO performance.

Embracing managed indexing

How could these issues have been avoided?

One of the best ways for large enterprise sites to thrive in SEO is by scaling down through managed indexing.

For a site of tens or hundreds of millions of pages, it’s crucial to move beyond a keyword-focused approach to one driven by data, rules and automation.

Data-driven indexing

One significant advantage of large sites is the wealth of internal search data at their disposal.

Instead of relying on external tools, they can utilize this data to understand regional and seasonal search demand and trends at a granular level.

This data, when mapped to existing content inventory, can provide a robust guide for what content to index, as well as when and where to do it.
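To make the mapping concrete, here is a minimal Python sketch that joins internal search demand with inventory counts and keeps only queries that clear both thresholds. The function name, the thresholds and the sample data are assumptions for illustration, not a recommended configuration.

```python
def pick_indexable_queries(
    search_counts: dict[str, int],
    inventory_counts: dict[str, int],
    min_searches: int = 50,
    min_results: int = 5,
) -> list[str]:
    """Return queries with enough demand and enough inventory, highest demand first."""
    eligible = [
        query
        for query, searches in search_counts.items()
        if searches >= min_searches
        and inventory_counts.get(query, 0) >= min_results
    ]
    # Sort by demand (descending), then alphabetically for a stable order.
    return sorted(eligible, key=lambda q: (-search_counts[q], q))


# Hypothetical internal search log totals and matching inventory counts.
searches = {"oak desk": 900, "vintage lamp": 120, "purple widget": 300, "rare thing": 10}
inventory = {"oak desk": 40, "vintage lamp": 8, "purple widget": 0, "rare thing": 50}

print(pick_indexable_queries(searches, inventory))
```

In practice the same join could be run per region or per season, so that a page is only indexed where and when there is both demand and content to satisfy it.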

Deduplicate and consolidate

A small number of authoritative, high-ranking URLs is far more valuable than a large volume of pages scattered throughout the top 100.

It is worthwhile to consolidate similar pages using canonicals, leveraging rules and automation to do so. Some pages might be consolidated based on similarity scores, while others might be clustered together if they collectively rank for similar queries.

The key here is experimentation. Tweak the logic and revise thresholds over time.
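One possible way to sketch similarity-based consolidation in Python is shown below. It uses Jaccard similarity on word sets and a greedy pass that lets the most-visited page in each group become the canonical. The similarity measure, the 0.8 threshold and the sample pages are my assumptions; the article does not prescribe a specific method.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity of two token sets (1.0 means identical sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def assign_canonicals(
    pages: dict[str, str],
    visits: dict[str, int],
    threshold: float = 0.8,
) -> dict[str, str]:
    """Map every URL to a canonical URL.

    URLs are processed from most to least visited. Each URL joins the first
    existing canonical it is similar enough to, or becomes a canonical itself.
    """
    tokens = {url: set(text.lower().split()) for url, text in pages.items()}
    canonicals: list[str] = []
    mapping: dict[str, str] = {}
    for url in sorted(pages, key=lambda u: (-visits.get(u, 0), u)):
        match = next(
            (c for c in canonicals if jaccard(tokens[url], tokens[c]) >= threshold),
            None,
        )
        if match is None:
            canonicals.append(url)
            mapping[url] = url
        else:
            mapping[url] = match
    return mapping


# Hypothetical page texts and visit counts.
pages = {
    "/a": "red leather wallet handmade",
    "/b": "red leather wallet handmade gift",
    "/c": "blue ceramic mug",
}
visits = {"/a": 100, "/b": 10, "/c": 5}
print(assign_canonicals(pages, visits))
```

The pairwise comparison here is quadratic, so it only suits small batches. The `threshold` parameter is the knob to tweak and revise over time.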

Clean up thin and empty content pages

When present in massive volumes, thin and empty pages can cause significant damage to site hygiene and performance.

If it’s too challenging to improve them with valuable content or to consolidate, then they should be noindexed or even disallowed.
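A rule like this can be expressed as a small function that picks the robots meta directive for a search or listing page. The sketch below is illustrative only: the function name, the minimum-results threshold and the blocked-terms list are hypothetical, and the blocked-terms check is there to address the kind of abuse described in Case 3.

```python
def robots_meta(
    result_count: int,
    query: str,
    blocked_terms: frozenset[str],
    min_results: int = 3,
) -> str:
    """Choose a robots meta directive for an autogenerated search page."""
    words = set(query.lower().split())
    if words & blocked_terms:
        # Queries containing blocked terms should never be indexed or followed.
        return "noindex, nofollow"
    if result_count < min_results:
        # Thin or empty result pages stay out of the index.
        return "noindex, follow"
    return "index, follow"


# Hypothetical blocklist and queries.
blocked = frozenset({"casino"})
print(robots_meta(0, "oak desk", blocked))
print(robots_meta(25, "oak desk", blocked))
print(robots_meta(25, "casino bonus", blocked))
```

The returned string would go into the page’s `<meta name="robots">` tag. Note that a page must remain crawlable for a noindex directive to be seen, so noindex and a robots.txt disallow should not be combined on the same URL.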

Reduce infinite spaces with robots.txt

Fifteen years after Google first wrote about “infinite spaces,” over-indexing of filters, sorting and other parameter combinations continues to plague many ecommerce sites.

In extreme cases, crawlers can crash servers as they try to make their way through these links. Thankfully, this can be easily addressed through robots.txt.
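Before deploying such rules, it can help to check them against sample URLs. The sketch below uses Python’s standard `urllib.robotparser` for that. The `/filter/` and `/sort/` prefixes and the example.com URLs are hypothetical, and the sketch sticks to plain path prefixes because the standard-library parser matches rules by prefix rather than by wildcard patterns.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: assumes filter and sort pages live under these prefixes.
ROBOTS_TXT = """\
User-agent: *
Disallow: /filter/
Disallow: /sort/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
sample_urls = [
    "https://example.com/filter/color-red/size-m",
    "https://example.com/sort/price-asc",
    "https://example.com/listing/123",
]
for url in sample_urls:
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url} -> {'allowed' if allowed else 'blocked'}")
```

If your faceted URLs are built from query parameters rather than path prefixes, test the rules with a tool that follows Google’s own matching behavior instead.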

Client-side rendering

Using client-side rendering for certain on-page components that you don’t want indexed by search engines might be an option. Consider this carefully.

Better yet, these components should be inaccessible for logged-out users.

Stakes rise dramatically as scale increases

While SEO is often perceived as a “free” source of traffic, this is somewhat misleading. It costs money to host and serve content.

Costs might be negligible per URL, but once the scale reaches hundreds of millions or billions of pages, pennies start to add up to real numbers.
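The arithmetic is simple enough to sketch in a few lines of Python. Every figure below is a made-up placeholder; substitute your own URL counts, crawl rates and per-request costs.

```python
def monthly_serving_cost(
    url_count: int,
    requests_per_url: float,
    cost_per_request: float,
) -> float:
    """Estimate the monthly cost of serving bot requests for a set of URLs."""
    return url_count * requests_per_url * cost_per_request


# Hypothetical inputs: 500 million URLs, 2 bot requests per URL per month,
# and $0.00001 per request for hosting, compute and bandwidth.
cost = monthly_serving_cost(500_000_000, 2, 0.00001)
print(f"Estimated monthly cost: ${cost:,.0f}")
```

Running the same estimate for the URLs you would remove from crawling gives a rough savings figure to weigh against any traffic they bring in.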

Although the ROI of SEO is tricky to measure, a penny saved is a penny earned, and cost savings through managed crawling and indexing should be one factor when considering indexing strategies for large sites.

A pragmatic approach to SEO – with well-managed crawling and indexing, guided by data, rules and automation – can protect large websites from costly mistakes.

Opinions expressed in this article are those of the guest author and not necessarily those of Search Engine Land.


Original Source: How to improve enterprise-level crawling and indexing efficiency