After analyzing a Google patent related to PAA and PASF, I started reviewing other recently granted patents. And it wasn’t long before I surfaced another very interesting one regarding the use of machine learning models. The patent I just analyzed focuses on using and/or generating a machine learning model in response to a query (when Google needs to predict an answer because the standard search results could not provide an adequate one). Reading the patent multiple times underscored how sophisticated Google’s systems could be when they need to provide a quality answer (or prediction) for users.

As with any patent, we never know whether Google actually implemented what it covers, but it’s always possible. And if it was implemented, Google could not only be using a trained machine learning model to help predict an answer to a query, but it could also index those machine learning models, associate them with various entities, webpages, etc., and then retrieve and use those models for subsequent related searches. Think about how powerful and scalable that could be for Google.

In addition, the patent explains that Google can return an interactive interface to the machine learning model in the search results, which enables users to add parameters that can be used to generate a prediction when the search results aren’t sufficient. That part of the patent had me thinking about the message Google rolled out in the SERPs in April of 2020 for queries that don’t return quality search results. The current implementation doesn’t provide a form for users to interact with, but it sure could at some point. And maybe that interface could be used for more queries in the future rather than just the more obscure ones it surfaces for now. I’ll cover more about this in the bullets below.

Key points from the patent:

Similar to my last post covering a recent Google patent, I think the best way to cover the details is to provide bullets of the key points.

Generating and/or utilizing a machine learning model in response to a search request

US 11645277 B2

Date Granted: May 9, 2023

Date Filed: December 12, 2017

Assignee Name: Google LLC

1. Google’s patent explains that if an answer cannot be located with certainty, and the user submits a request that is predictive in nature, a trained machine learning model can be used to generate a prediction.

2. For example, Google could first generate search results based on a query, but if the results aren’t of sufficient quality, then a machine learning model can be used to provide a stronger predicted answer. So, the system can provide predicted answers based on a machine learning model when an answer cannot be validated by Google.
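The flow in points 1 and 2 can be sketched as a simple router: run search first, and only reach for a model when the results fall short. This is a minimal sketch, not Google’s implementation. The `quality` score, the `QUALITY_THRESHOLD` value of 0.7, and the `search`/`predict` callables are all hypothetical stand-ins, since the patent doesn’t say how result quality is scored.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class SearchResult:
    url: str
    quality: float  # hypothetical quality score in [0, 1]


@dataclass
class Answer:
    results: List[SearchResult]
    prediction: Optional[float] = None
    note: Optional[str] = None


QUALITY_THRESHOLD = 0.7  # hypothetical cutoff; the patent gives no number


def answer_query(
    query: str,
    search: Callable[[str], List[SearchResult]],
    predict: Callable[[str], Optional[float]],
) -> Answer:
    """Return standard results, adding a model prediction only when they fall short."""
    results = search(query)
    best = max((r.quality for r in results), default=0.0)
    if best >= QUALITY_THRESHOLD:
        # Search found a good enough answer, so no prediction is needed.
        return Answer(results=results)
    prediction = predict(query)
    if prediction is None:
        # No usable model: fall back to the standard results alone.
        return Answer(results=results)
    return Answer(
        results=results,
        prediction=prediction,
        note="This answer is a prediction from a trained machine learning model.",
    )
```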

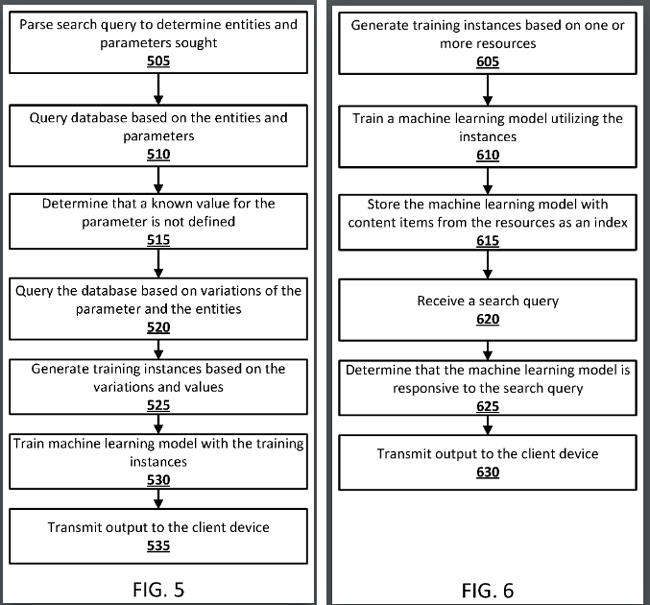

3. Also, the machine learning model can be generated “on the fly”, and Google might store trained machine learning models in a search index. Yes, Google could index machine learning models that were just trained to provide predictions based on specific types of queries. I’ll cover more about this soon.

4. The patent provided an example based on the query, “How many doctors will there be in China in 2050?” If an authoritative answer cannot be provided via the standard search results, then the query can be passed to a trained machine learning model to generate a prediction.

5. The patent goes on to explain that the system might take other years like 2010, 2015, 2020, etc. and use those to generate a prediction (via a machine learning model trained on those parameters).

6. The patent explains that trained machine learning models can be indexed by one or more content items from “resources utilized to train the model”. And for future queries, when the system identifies parameters that are related to a machine learning model (e.g. if a subsequent user asks a related question like, “How many doctors will there be in China in 2040?”), the machine learning model could be used to generate a prediction.

7. The patent goes on to explain that the machine learning models could be stored with one or more content items, like entities in a knowledge graph, table names, column names, webpage names, and more. In addition, words associated with the query like “China” and “doctors” could be used by the machine learning model to generate a prediction.
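Points 6 and 7 describe indexing trained models by content items and retrieving them for related queries. A toy version of that index might look like the following. The `ModelIndex` class, the term-overlap scoring, and the four-digit year extraction are hypothetical simplifications for illustration; the patent doesn’t describe how matching is scored.

```python
import re
from collections import Counter, defaultdict
from typing import Callable, Dict, Iterable, List, Optional

Model = Callable[[int], float]


class ModelIndex:
    """Maps content items (entities, table/column names, etc.) to trained models."""

    def __init__(self) -> None:
        self._models: Dict[str, Model] = {}
        self._by_item: Dict[str, List[str]] = defaultdict(list)

    def add(self, model_id: str, model: Model, content_items: Iterable[str]) -> None:
        self._models[model_id] = model
        for item in content_items:
            self._by_item[item.lower()].append(model_id)

    def lookup(self, query: str) -> Optional[Model]:
        """Return the model whose content items overlap most with the query terms."""
        terms = re.findall(r"\w+", query.lower())
        scores: Counter = Counter()
        for term in terms:
            # .get avoids adding empty entries to the defaultdict for unknown terms.
            for model_id in self._by_item.get(term, []):
                scores[model_id] += 1
        if not scores:
            return None
        best_id, _ = scores.most_common(1)[0]
        return self._models[best_id]


def extract_year(query: str) -> Optional[int]:
    """Pull a four-digit year such as 2040 out of the query, if present."""
    match = re.search(r"\b(?:19|20)\d{2}\b", query)
    return int(match.group()) if match else None
```

A follow-up query like “How many doctors will there be in China in 2040?” would match a model indexed under “China” and “doctors”, and the extracted year would be passed to it as the parameter.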

8. The patent goes on to explain that the system might provide an interactive interface for users to select parameters that can be passed to the machine learning model. That can be a text field, a dropdown menu, etc. Also, the response could include a message presented to the user that the response is a prediction based on a trained machine learning model. So Google wants to make sure users understand it’s a prediction based on a machine learning model versus answers provided based on data it has indexed.
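One way to picture the interactive interface and disclosure message from point 8: describe each model parameter as a form field, validate what the user enters, and always attach a note that the output is a prediction. The `ParameterField` and `PredictionCard` types and the disclosure wording are hypothetical; the patent mentions text fields and dropdown menus but doesn’t define a format.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ParameterField:
    name: str
    widget: str  # "text" or "dropdown"
    options: List[str] = field(default_factory=list)  # only used by dropdowns


@dataclass
class PredictionCard:
    value: float
    disclosure: str


DISCLOSURE = "This is a prediction generated by a trained machine learning model."


def build_prediction_card(
    model: Callable[..., float],
    fields: List[ParameterField],
    user_input: Dict[str, str],
) -> PredictionCard:
    """Validate user-supplied parameters, run the model, and label the output."""
    params = {}
    for f in fields:
        if f.name not in user_input:
            raise ValueError(f"missing parameter: {f.name}")
        value = user_input[f.name]
        if f.widget == "dropdown" and value not in f.options:
            raise ValueError(f"{value!r} is not a valid option for {f.name}")
        params[f.name] = value
    return PredictionCard(value=model(**params), disclosure=DISCLOSURE)
```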

9. The trained model can then be validated to ensure the predictions are of at least a “threshold quality”. Anything below a certain threshold can be suppressed and not provided to the user. In that case, the standard search results can be displayed instead.
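The “threshold quality” check in point 9 could be approximated by scoring the model on held-out data before any prediction is shown. Mean absolute error as the quality metric and the `max_error` parameter are my assumptions; the patent doesn’t say which measure is used.

```python
from typing import Callable, List, Optional, Tuple


def mean_absolute_error(
    model: Callable[[float], float], holdout: List[Tuple[float, float]]
) -> float:
    """Average absolute gap between predictions and known outcomes."""
    return sum(abs(model(x) - y) for x, y in holdout) / len(holdout)


def validated_prediction(
    model: Callable[[float], float],
    holdout: List[Tuple[float, float]],
    x: float,
    max_error: float,
) -> Optional[float]:
    """Return a prediction only if the model meets the quality bar.

    None signals suppression: the caller should show standard search results instead.
    """
    if not holdout or mean_absolute_error(model, holdout) > max_error:
        return None
    return model(x)
```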

10. Beyond public search results, the patent explains that the system could be used on a private database to help companies predict certain outcomes. The patent describes databases that are “private to a group of users, a corporation, and/or other restricted sets.” For example, an employee of an amusement park might ask, “How many snow cones will we sell tomorrow?” The system could then query a private database to understand sales from previous days, weather information, attendance data, etc., to predict an answer for the employee.

11. The patent explains that the system could provide push notifications from an “automated assistant” at some point. And just thinking out loud, I’m wondering if that could come from a Jarvis-like assistant, which I described in my post about Google’s Code Red, the one that triggered thousands of Code Reds at publishers.

12. From a latency standpoint, the patent explains that there could be a delay after a user submits a query. When that happens, the standard search results could be initially displayed along with a message that “good” results are not available for the query and that a machine learning model is being used to generate a prediction. In those situations, the system could push that prediction to the user at a later time or provide a hyperlink for users to click to view the machine learning output.
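The latency handling in point 12 maps naturally onto asynchronous code: return the standard results right away and push the prediction whenever the model finishes. This sketch uses Python’s `asyncio`; `handle_query`, `slow_predict`, and the `push` callback are hypothetical stand-ins for whatever delivery channel (a notification or a link) a real system would use.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List, Tuple

PENDING_MESSAGE = (
    "Good results are not available for this query. "
    "A machine learning model is generating a prediction."
)


async def handle_query(
    query: str,
    search: Callable[[str], List[str]],
    slow_predict: Callable[[str], Awaitable[float]],
    push: Callable[[float], None],
) -> Tuple[Dict[str, object], "asyncio.Task[None]"]:
    """Return standard results immediately; deliver the prediction later."""
    results = search(query)

    async def deliver() -> None:
        prediction = await slow_predict(query)
        push(prediction)  # e.g. a push notification or a "view prediction" link

    # Schedule the slow model in the background instead of awaiting it here.
    task = asyncio.create_task(deliver())
    return {"results": results, "message": PENDING_MESSAGE}, task
```

Returning the task lets the caller keep a reference to it (so it isn’t garbage-collected) and await it if needed.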

13. Also, the patent says that in some situations the user would have to affirm a prompt for the process to continue. For example, the system might provide a message stating, “A good answer is not available. Do you want me to predict an answer for you?” Then the machine learning model would be trained only if affirmative user input is received in response to the prompt. As I explained earlier, I see a connection with the “There aren’t great matches for your search” message that rolled out in April of 2020. I’m wondering if that could expand to utilize this model in the future…
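The consent step in point 13 amounts to a gate in front of training. In this sketch, `ask`, `train`, and `maybe_predict` are hypothetical names; the key detail from the patent is that training only happens after an affirmative answer.

```python
from typing import Callable, Optional

PROMPT = "A good answer is not available. Do you want me to predict an answer for you?"


def maybe_predict(
    ask: Callable[[str], bool],
    train: Callable[[], Callable[[int], float]],
    x: int,
) -> Optional[float]:
    """Train and run a model only if the user says yes to the prompt."""
    if not ask(PROMPT):
        return None  # user declined: keep showing the standard results
    model = train()  # training cost is only paid after consent
    return model(x)
```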

Summary: Google could be predicting quality answers in a powerful and super-efficient way via (indexed) machine learning models.

Although we don’t know if any specific patent is being used, the power and efficiency of this process makes a lot of sense for Google. From generating machine learning models “on the fly” to indexing those models for future use to utilizing an interactive interface with push notifications, Google seems to be setting the stage for an assistant like Jarvis. So, the next time you ask Google to predict an answer, think about this patent. And you might just be prompted for more information at some point (until Jarvis can do all of this in a nanosecond). 🙂

GG

![Jarvis Rising – How Google could generate a machine learning model “on the fly” to predict answers when Search can’t, and how it could index those models to predict answers for future queries [Patent]](https://jazzkarate.com/wp-content/uploads/2023/07/patent-ml-models-google-sm.jpg)